In class, I asked for examples of things that are Poisson distributed. One student said the number of chocolate chips in a cookie is Poisson distributed. He’s right.

Here is the intuition for when you have a Poisson distribution. First, you should have a counting process where you are interested in the total number of events that occur by time t or in space s. If each of these events occurs independently of the others, then the count follows a Poisson distribution.

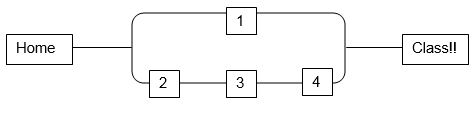

Let’s consider the Poisson process properties of a chocolate chip cookie. Let N(t) denote the number of chocolate chips in a cookie of size t. N(t) is a Poisson process with rate y if all four of the following conditions hold:

1) The cookie has stationary increments, where the expected number of chocolate chips in a cookie is proportional to the size of the cookie. In other words, a cookie with twice as much dough should have twice as many chocolate chips on average (N(t) ~ Poisson(y*t)). That is a reasonable assumption.

2) The cookie has independent increments. The number of chocolate chips in a cookie does not affect the number of chocolate chips in the next cookie.

3) A cookie without any dough cannot have any chocolate chips (N(0)=0).

4) The probability of finding two or more chocolate chips in a cookie of size h is o(h). In other words, you will find at most one chocolate chip in a tiny amount of dough.
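When these four conditions hold, the chip count in a cookie of size t follows the Poisson probability mass function with mean y*t. Here is a minimal sketch of that formula using only the Python standard library. The rate of 6 chips per unit of dough is a made-up number for illustration, not something measured from real cookies.

```python
import math

def poisson_pmf(k, rate, size=1.0):
    """Return P(N(size) = k) when N(size) ~ Poisson(rate * size)."""
    mean = rate * size
    return math.exp(-mean) * mean**k / math.factorial(k)

# Hypothetical rate: an average of 6 chips per unit of dough.
rate = 6.0

# Chance that a standard cookie (size 1) has no chips at all.
p_chipless = poisson_pmf(0, rate)

# Doubling the dough doubles the mean, so a chipless cookie gets much rarer.
p_chipless_double = poisson_pmf(0, rate, size=2.0)

print(f"P(no chips, size 1) = {p_chipless:.5f}")
print(f"P(no chips, size 2) = {p_chipless_double:.7f}")
```

Note that the chipless probability for the double-sized cookie is the square of the probability for the standard cookie, which is what independent increments would predict for two standard-sized halves.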

All of these assumptions appear to be true, at least in a probabilistic sense. Technically, there may be some dependence between chips, since a bag of chocolate chips is a finite population (whatever is in the bag). The number of chocolate chips in one cookie then depends somewhat on the number in the others, because knowing how many chips we have used thus far tells us something about how many chips are left. This would violate the independent increments assumption. However, the independence assumption is approximately true, since the frequency of chocolate chips in the cookie you are eating is roughly independent of the frequency of chocolate chips in the cookies you have already eaten. As a result, I expect the Poisson to be an excellent approximation.
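One way to check the finite-bag concern is a small simulation. The sketch below assumes a hypothetical bag of 600 chips mixed uniformly into 100 equal-sized cookies, so the total is fixed and the counts in different cookies are slightly dependent. It then compares the simulated share of cookies with k chips against the Poisson probability with the same mean. The batch sizes, seed, and function names are all illustrative choices.

```python
import math
import random
from collections import Counter

def bake_batch(n_chips, n_cookies, rng):
    """Drop each chip from a finite bag into a uniformly random cookie."""
    counts = [0] * n_cookies
    for _ in range(n_chips):
        counts[rng.randrange(n_cookies)] += 1
    return counts

def poisson_pmf(k, mean):
    """Return P(N = k) for N ~ Poisson(mean)."""
    return math.exp(-mean) * mean**k / math.factorial(k)

def compare(n_chips=600, n_cookies=100, n_batches=200, seed=0):
    """Return (k, simulated frequency, Poisson probability) rows."""
    rng = random.Random(seed)
    tally = Counter()
    for _ in range(n_batches):
        tally.update(bake_batch(n_chips, n_cookies, rng))
    total_cookies = n_batches * n_cookies
    mean = n_chips / n_cookies  # average chips per cookie
    return [
        (k, tally[k] / total_cookies, poisson_pmf(k, mean))
        for k in range(2 * int(mean) + 1)
    ]

if __name__ == "__main__":
    for k, simulated, predicted in compare():
        print(f"{k:2d} chips: simulated {simulated:.4f}, Poisson {predicted:.4f}")
```

Under these assumptions each cookie's count is really binomial rather than Poisson, but with many chips and many cookies the two columns should track each other closely, which is the sense in which the approximation holds.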

Picture courtesy of Betty Crocker